Overview

DeepSeek-V3 is a newly released open-source Mixture-of-Experts (MoE) language model with 671B total parameters. It activates 37B parameters per token and supports context lengths up to 128K tokens. This post highlights its main features, performance, and training details.

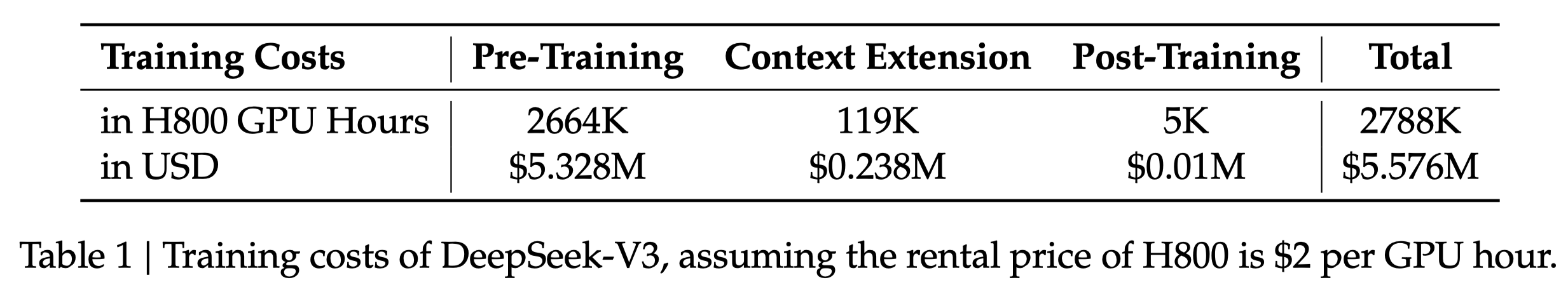

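Remarkably, its estimated training cost is only about $5.576M (2.788M H800 GPU hours at an assumed rental price of $2 per GPU hour, excluding prior research and ablation experiments), yet it remains competitive with state-of-the-art frontier models.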

DeepSeek models are now available in PySpur.

Performance & Benchmarks

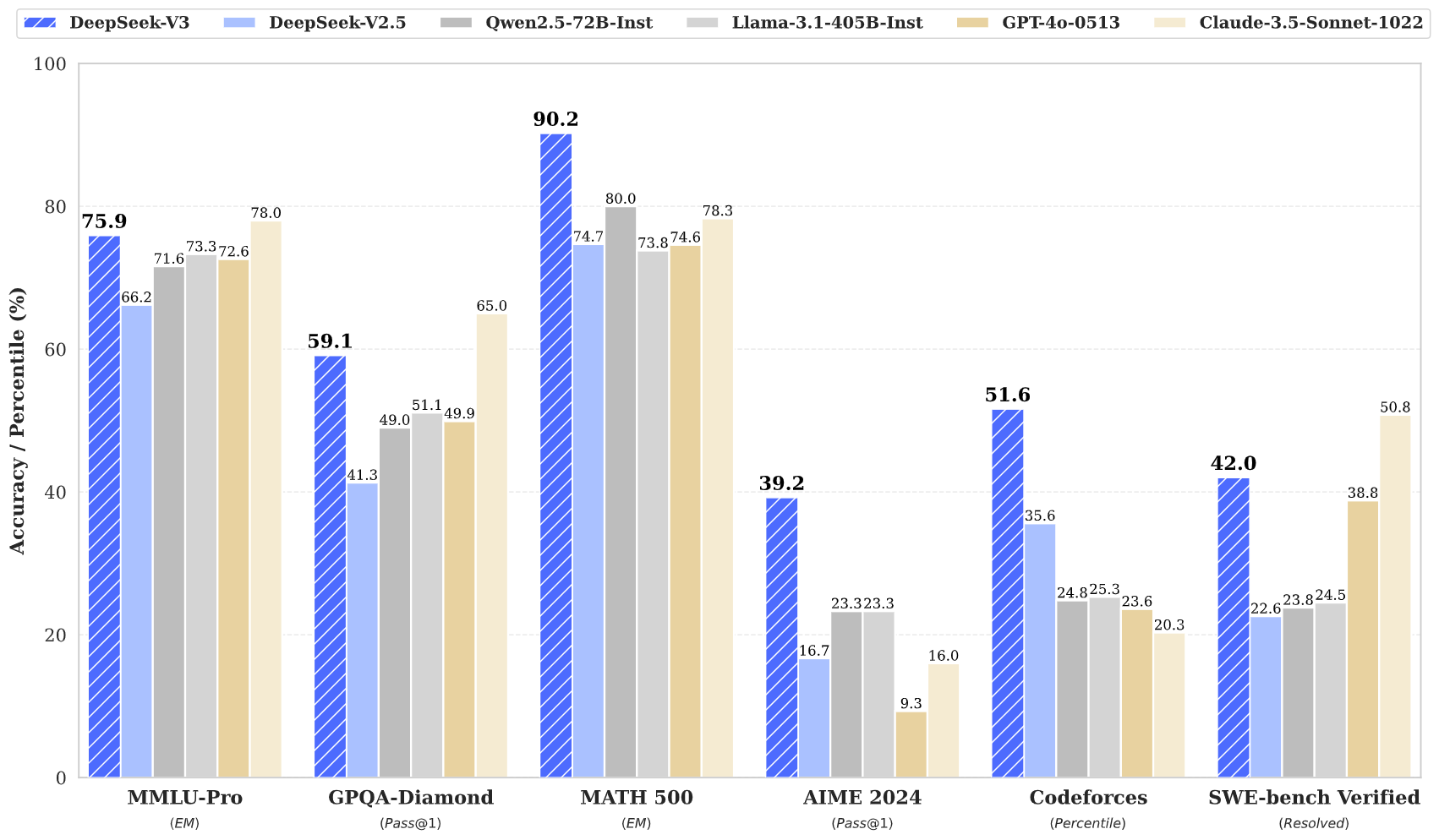

Let's start with the most important question: how well does it perform on common benchmarks?

According to its developers, DeepSeek-V3 outperforms other open-source models and matches or surpasses leading closed-source frontier LLMs on many math, code, and multilingual benchmarks.

Training Cost & Deployment

Despite its size, DeepSeek-V3 was pre-trained on 14.8T tokens, and its full training run (pre-training, context extension, and post-training) took an estimated 2.788M H800 GPU hours, which is very efficient for a model of this scale.
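The headline cost figure follows from simple arithmetic. The sketch below reproduces it from the per-stage GPU-hour breakdown given in the DeepSeek-V3 technical report (2,664K hours for pre-training, 119K for context extension, 5K for post-training) and the report's assumed rental price of $2 per H800 GPU hour. The helper names are illustrative, and the wall-clock figure is an idealized estimate that assumes every GPU is busy for the whole run.

```python
# Per-stage H800 GPU hours as reported in the DeepSeek-V3 technical report.
GPU_HOURS = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}
PRICE_PER_GPU_HOUR = 2.0  # USD; the rental price assumed in the report, not a measured cost
NUM_GPUS = 2048           # size of the H800 training cluster
PRETRAIN_TOKENS_T = 14.8  # pre-training tokens, in trillions


def training_cost_usd(gpu_hours: float, price_per_hour: float = PRICE_PER_GPU_HOUR) -> float:
    """Rental-equivalent cost: GPU hours times the hourly price."""
    return gpu_hours * price_per_hour


def wall_clock_days(gpu_hours: float, num_gpus: int = NUM_GPUS) -> float:
    """Idealized wall-clock days, assuming every GPU is busy for the whole run."""
    return gpu_hours / num_gpus / 24


total_hours = sum(GPU_HOURS.values())
pretrain = GPU_HOURS["pre-training"]
print(f"total GPU hours: {total_hours:,}")
print(f"estimated cost:  ${training_cost_usd(total_hours) / 1e6:.3f}M")
print(f"pre-training:    {wall_clock_days(pretrain):.1f} days on {NUM_GPUS} GPUs")
print(f"per 1T tokens:   {pretrain / PRETRAIN_TOKENS_T:,.0f} GPU hours")
```

Running it prints a total of 2,788,000 GPU hours, an estimated $5.576M, roughly 54.2 days of pre-training on 2,048 GPUs, and 180,000 GPU hours per trillion pre-training tokens.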

Some industry observers speculate that it was trained with 1–2 orders of magnitude less compute than previous frontier LLMs.

- Hardware: 2048 NVIDIA H800 GPUs

- Training Framework: Custom pipeline parallelism (“DualPipe”) plus efficient cross-node all-to-all kernels

- Context Length: Extended from 4K to 32K and then to 128K tokens in two YaRN-based training stages
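YaRN extends a RoPE model's context window by rescaling its rotary frequencies unevenly: high-frequency dimensions, which complete many rotations within the original window, are left unchanged; low-frequency dimensions are divided by the scale factor s; dimensions in between are blended with a linear ramp. YaRN also applies a mild temperature correction to the attention logits. The sketch below follows the formulation in the YaRN paper; the head dimension, RoPE base, and scale factor s = 32 (128K / 4K) are illustrative assumptions, not DeepSeek-V3's exact configuration.

```python
import math
from typing import List


def yarn_frequencies(head_dim: int, base: float, scale: float, orig_ctx: int,
                     alpha: float = 1.0, beta: float = 32.0) -> List[float]:
    """Return YaRN-adjusted RoPE frequencies, one per (even, odd) dimension pair."""
    freqs = []
    for i in range(0, head_dim, 2):
        theta = base ** (-i / head_dim)        # original RoPE frequency for this pair
        wavelength = 2 * math.pi / theta
        r = orig_ctx / wavelength              # full rotations within the original context
        if r < alpha:
            gamma = 0.0                        # low frequency: interpolate fully (theta / scale)
        elif r > beta:
            gamma = 1.0                        # high frequency: keep unchanged
        else:
            gamma = (r - alpha) / (beta - alpha)  # linear ramp between the two regimes
        freqs.append((1 - gamma) * theta / scale + gamma * theta)
    return freqs


def yarn_attention_scale(scale: float) -> float:
    """YaRN's recommended sqrt(1/t) = 0.1 * ln(s) + 1.

    Queries and keys are each multiplied by this factor, so the attention
    logits are scaled by 1/t.
    """
    return 0.1 * math.log(scale) + 1.0


# Usage: a hypothetical 64-dim head with base 10000, extended 32x from a 4K window.
freqs = yarn_frequencies(head_dim=64, base=10000.0, scale=32.0, orig_ctx=4096)
print(freqs[0], freqs[-1], yarn_attention_scale(32.0))
```

The fastest-rotating pair keeps its original frequency, while the slowest one is divided by s, which is the "by-parts" behavior that lets YaRN stretch the window without blurring local position information.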

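The reference deployment separates the prefilling and decoding stages to balance throughput with service-level objectives: the minimum prefilling unit is 4 nodes (32 GPUs) and the minimum decoding unit is 40 nodes (320 GPUs). Smaller-scale deployments may require advanced optimizations or partial-parameter techniques.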
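A back-of-the-envelope calculation shows why small deployments are hard. The sketch below counts only the memory needed to hold all 671B weights, assuming 80 GB of HBM per H800 GPU and 8 GPUs per node; it deliberately ignores the KV cache, activations, and framework overhead, so real requirements are higher. The helper names are hypothetical.

```python
import math

TOTAL_PARAMS = 671e9           # total parameters (all experts), not just the 37B active ones
GPU_MEMORY_GB = 80             # HBM per H800 GPU
GPUS_PER_NODE = 8              # assumption: one 8-GPU server per node
BYTES_PER_PARAM = {"FP8": 1, "BF16": 2}


def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed just to store the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params: float, bytes_per_param: int) -> int:
    """Lower bound on GPU count: weights only, perfectly sharded, no KV cache."""
    return math.ceil(weight_memory_gb(num_params, bytes_per_param) / GPU_MEMORY_GB)


for fmt, nbytes in BYTES_PER_PARAM.items():
    gpus = min_gpus_for_weights(TOTAL_PARAMS, nbytes)
    nodes = math.ceil(gpus / GPUS_PER_NODE)
    print(f"{fmt}: {weight_memory_gb(TOTAL_PARAMS, nbytes):,.0f} GB of weights -> "
          f">= {gpus} GPUs (>= {nodes} nodes)")
```

Even in FP8, the weights alone (about 671 GB) exceed the 640 GB of HBM in a single 8-GPU node, before any KV cache is allocated.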

Technical Details

- Mixture-of-Experts (MoE): Each MoE layer has 1 shared expert and 256 routed experts, of which 8 are activated per token. For load balancing, DeepSeek-V3 uses an auxiliary-loss-free strategy, avoiding the performance penalties seen in traditional MoE models that rely on large auxiliary losses.

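- Multi-Token Prediction (MTP): During training, the model predicts additional future tokens at each position (one extra token in DeepSeek-V3) using sequential MTP modules that keep the full causal chain, rather than independent parallel heads. This potentially improves data efficiency, and the MTP modules can be reused for speculative decoding to speed up inference.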

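- Training with FP8 Precision: The developers introduced an FP8 mixed-precision framework for large-scale training, using fine-grained scaling (1×128 tiles for activations, 128×128 blocks for weights) to limit quantization error. They also used the DualPipe pipeline-parallelism algorithm to overlap computation and communication.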

- Knowledge Distillation: The model is aligned with human preferences through Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL), and its post-training distills reasoning capabilities from a long chain-of-thought model in the DeepSeek-R1 series.
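The auxiliary-loss-free load balancing mentioned above can be sketched in a few lines. As described in the DeepSeek-V3 report, each expert gets a bias that is added to its affinity score only when choosing the top-k experts; the gating weights still come from the original scores, and after each training step the bias is lowered by a fixed step γ for overloaded experts and raised for underloaded ones. The sketch below is a simplified pure-Python illustration: treating an expert as "overloaded" when its load exceeds the batch mean is an assumption, and `route_tokens` and `update_bias` are hypothetical names.

```python
from typing import Dict, List


def route_tokens(affinities: List[List[float]], bias: List[float], k: int) -> List[Dict[int, float]]:
    """Pick top-k experts per token using affinity + bias; gate with the raw affinities.

    affinities[t][e] is token t's positive affinity (e.g. a sigmoid score) for expert e.
    """
    routed = []
    for scores in affinities:
        # The bias influences *which* experts are selected...
        ranked = sorted(range(len(scores)), key=lambda e: scores[e] + bias[e], reverse=True)
        chosen = ranked[:k]
        # ...but not the gating weights, which normalize the original scores.
        total = sum(scores[e] for e in chosen)
        routed.append({e: scores[e] / total for e in chosen})
    return routed


def update_bias(routed: List[Dict[int, float]], bias: List[float], gamma: float) -> List[float]:
    """Lower the bias of overloaded experts by gamma and raise it for underloaded ones."""
    load = [0] * len(bias)
    for gates in routed:
        for e in gates:
            load[e] += 1
    mean_load = sum(load) / len(bias)  # assumption: "overloaded" means above the mean load
    new_bias = []
    for e, b in enumerate(bias):
        if load[e] > mean_load:
            new_bias.append(b - gamma)
        elif load[e] < mean_load:
            new_bias.append(b + gamma)
        else:
            new_bias.append(b)
    return new_bias


# Usage: every token slightly prefers expert 0, so expert 0 is overloaded at first.
affinities = [[0.9, 0.8, 0.7]] * 6
bias = [0.0, 0.0, 0.0]
step1 = route_tokens(affinities, bias, k=1)   # all tokens go to expert 0
bias = update_bias(step1, bias, gamma=0.15)   # expert 0 penalized, experts 1 and 2 boosted
step2 = route_tokens(affinities, bias, k=1)   # tokens now shift to expert 1
```

Because this toy batch uses identical tokens, the whole batch moves at once; with varied affinities, a small γ mainly nudges tokens near the decision boundary, so load evens out gradually without adding a loss term to the training objective.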
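One ingredient the DeepSeek-V3 report credits for stable FP8 training is fine-grained scaling: instead of one scale for a whole tensor, each small group of values gets its own scale, so a single outlier only costs precision within its own group. The report describes 1×128 tiles for activations and 128×128 blocks for weights; the sketch below is a simplified 1-D version that emulates E4M3 rounding (3 mantissa bits, largest finite value 448) with ordinary Python floats. The block size and helper names are illustrative assumptions, and real FP8 kernels run on GPU hardware rather than like this.

```python
import math
from typing import List, Tuple

E4M3_MAX = 448.0  # largest finite value in the FP8 E4M3 format


def round_to_e4m3(v: float) -> float:
    """Round v to the nearest E4M3-representable value, saturating at +/-448."""
    if v == 0.0:
        return 0.0
    v = max(-E4M3_MAX, min(E4M3_MAX, v))
    _, e = math.frexp(abs(v))        # abs(v) = m * 2**e with 0.5 <= m < 1
    exponent = max(e - 1, -6)        # -6 is the smallest normal exponent (subnormals below)
    step = 2.0 ** (exponent - 3)     # 3 mantissa bits -> 8 steps per power of two
    q = round(v / step) * step       # Python's round() breaks ties to even
    return max(-E4M3_MAX, min(E4M3_MAX, q))


def quantize_blockwise(x: List[float], block: int = 128) -> Tuple[List[float], List[float]]:
    """Quantize x in contiguous blocks, each with its own scale (block amax / 448)."""
    codes, scales = [], []
    for start in range(0, len(x), block):
        chunk = x[start:start + block]
        amax = max(abs(v) for v in chunk)
        scale = amax / E4M3_MAX if amax > 0 else 1.0
        scales.append(scale)
        codes.extend(round_to_e4m3(v / scale) for v in chunk)
    return codes, scales


def dequantize_blockwise(codes: List[float], scales: List[float], block: int = 128) -> List[float]:
    """Multiply each code by the scale of the block it belongs to."""
    return [c * scales[i // block] for i, c in enumerate(codes)]


# Usage: one outlier (100.0) sits in the second block.
x = [0.001, -0.002, 0.003, 0.004, 100.0, 0.5, -0.25, 1.0]
codes, scales = quantize_blockwise(x, block=4)            # one scale per 4 values
restored = dequantize_blockwise(codes, scales, block=4)
codes_t, scales_t = quantize_blockwise(x, block=len(x))   # a single per-tensor scale
restored_t = dequantize_blockwise(codes_t, scales_t, block=len(x))
print(restored[0], restored_t[0])
```

With a single per-tensor scale, the outlier 100.0 pushes 0.001 into E4M3's coarse subnormal range, while the per-block version reproduces it almost exactly because its block has its own, much smaller scale.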

Conclusion

DeepSeek-V3 stands out for its scale (671B total parameters, 37B active per token), MoE-based efficiency, and 128K-token context length. It appears to compete with high-end closed-source models on code and math benchmarks despite having been comparatively inexpensive to train. The checkpoints and implementation details are available under an open-source license.

Developers and researchers interested in self-hosting DeepSeek-V3 can find inference resources on its official GitHub repository.

If you want to use the cloud-hosted DeepSeek-V3 API, we invite you to explore the PySpur repository for seamless integration and experimentation with the model.